Just as there are widely understood empirical laws of nature (for example, what goes up must come down, or every action has an equal and opposite reaction), the field of AI was long defined by a single idea: that more compute, more training data and more parameters make a better AI model.

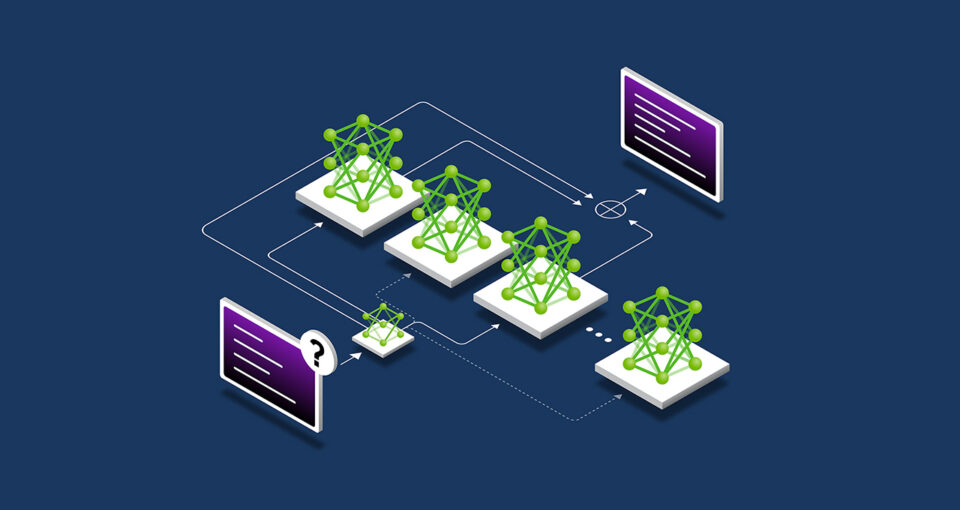

However, AI has since grown to need three distinct laws that describe how applying compute resources in different ways impacts model performance. Together, these AI scaling laws (pretraining scaling, post-training scaling and test-time scaling, also called long thinking) reflect how the field has evolved with techniques to use additional compute in a wide variety of increasingly complex AI use cases.

The recent rise of test-time scaling, which applies more compute at inference time to improve accuracy, has enabled AI reasoning models, a new class of large language models (LLMs) that perform multiple inference passes to work through complex problems while describing the steps required to solve a task. Test-time scaling requires intensive amounts of computational resources to support AI reasoning, which will drive further demand for accelerated computing.

What Is Pretraining Scaling?

Pretraining scaling is the original law of AI development. It demonstrated that by increasing training dataset size, model parameter count and computational resources, developers could expect predictable improvements in model intelligence and accuracy.

Each of these three elements (data, model size and compute) is interrelated. Per the pretraining scaling law, outlined in this research paper, when larger models are fed with more data, the overall performance of the models improves. To make this feasible, developers must scale up their compute, creating the need for powerful accelerated computing resources to run these larger training workloads.
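Scaling relationships of this kind are often modeled as power laws. The sketch below assumes that form purely for illustration: the function `predicted_loss` and its constants `scale` and `alpha` are hypothetical, chosen to show the shape of the curve, and are not taken from the article or fitted to any real model.

```python
# Illustrative power-law scaling curve.
# The constants are hypothetical and only demonstrate the shape of the trend.

def predicted_loss(n_params: float, scale: float = 1e13, alpha: float = 0.08) -> float:
    """Model loss as (scale / n_params) ** alpha, a simple power law."""
    return (scale / n_params) ** alpha


for n_params in (1e8, 1e9, 1e10, 1e11):
    print(f"{n_params:.0e} parameters -> predicted loss {predicted_loss(n_params):.3f}")

# Under a power law, every 10x increase in parameters multiplies the loss
# by the same constant factor, 10 ** -alpha.
ratio = predicted_loss(1e10) / predicted_loss(1e9)
print(f"loss ratio per 10x parameters: {ratio:.4f}")
```

The useful property is the predictability: under this assumed form, the improvement from each tenfold increase in model size is the same constant factor, which is what lets developers plan compute budgets in advance.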

This principle of pretraining scaling led to large models that achieved groundbreaking capabilities. It also spurred major innovations in model architecture, including the rise of billion- and trillion-parameter transformer models, mixture of experts models and new distributed training techniques, all demanding significant compute.

And the relevance of the pretraining scaling law continues: as humans continue to produce growing amounts of multimodal data, this trove of text, images, audio, video and sensor information will be used to train powerful future AI models.

What Is Post-Training Scaling?

Pretraining a large foundation model isn’t for everyone: it takes significant investment, skilled experts and datasets. But once an organization pretrains and releases a model, it lowers the barrier to AI adoption by enabling others to use its pretrained model as a foundation to adapt for their own applications.

This post-training process drives additional cumulative demand for accelerated computing across enterprises and the broader developer community. Popular open-source models can have hundreds or thousands of derivative models, trained across numerous domains.

Developing this ecosystem of derivative models for a variety of use cases could take around 30x more compute than pretraining the original foundation model.

Post-training techniques can further improve a model’s specificity and relevance for an organization’s desired use case. While pretraining is like sending an AI model to school to learn foundational skills, post-training enhances the model with skills applicable to its intended job. An LLM, for example, could be post-trained to tackle a task like sentiment analysis or translation, or to understand the jargon of a specific domain, like healthcare or law.

The post-training scaling law posits that a pretrained model’s performance can further improve (in computational efficiency, accuracy or domain specificity) using techniques including fine-tuning, pruning, quantization, distillation, reinforcement learning and synthetic data augmentation.

- Fine-tuning uses additional training data to tailor an AI model for specific domains and applications. This can be done using an organization’s internal datasets, or with pairs of sample model inputs and outputs.

- Distillation requires a pair of AI models: a large, complex teacher model and a lightweight student model. In the most common distillation technique, called offline distillation, the student model learns to mimic the outputs of a pretrained teacher model.

- Reinforcement learning, or RL, is a machine learning technique that uses a reward model to train an agent to make decisions that align with a specific use case. The agent aims to make decisions that maximize cumulative rewards over time as it interacts with an environment, such as a chatbot LLM that is positively reinforced by “thumbs up” reactions from users. This technique is known as reinforcement learning from human feedback (RLHF). Another, newer technique, reinforcement learning from AI feedback (RLAIF), instead uses feedback from AI models to guide the learning process, streamlining post-training efforts.

- Best-of-n sampling generates multiple outputs from a language model and selects the one with the highest reward score based on a reward model. It’s often used to improve an AI’s outputs without modifying model parameters, offering an alternative to fine-tuning with reinforcement learning.

- Search methods explore a range of potential decision paths before selecting a final output. This post-training technique can iteratively improve the model’s responses.
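To make the distillation item above more concrete, here is a minimal sketch of one common way to score how closely a student mimics a teacher: the KL divergence between their temperature-softened output distributions. This is an assumption about the loss, not something the article specifies; the function names, the temperature value and the example logits are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits over three classes for a single input.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # prefers a different class

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

In the offline setting the teacher is already pretrained, so its outputs can be computed once and reused. Training the student then amounts to lowering a loss like this one, which is smaller the more closely the student's distribution matches the teacher's.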

To support post-training, developers can use synthetic data to augment or complement their fine-tuning dataset. Supplementing real-world datasets with AI-generated data can help models improve their ability to handle edge cases that are underrepresented or missing in the original training data.

What Is Test-Time Scaling?

LLMs generate quick responses to input prompts. While this process is well suited for getting the right answers to simple questions, it may not work as well when a user poses complex queries. Answering complex questions, an essential capability for agentic AI workloads, requires the LLM to reason through the question before coming up with an answer.

It’s similar to the way most humans think: when asked to add two plus two, they provide an instant answer, without needing to talk through the fundamentals of addition or integers. But if asked on the spot to develop a business plan that could grow a company’s profits by 10%, a person will likely reason through various options and provide a multistep answer.

Test-time scaling, also known as long thinking, takes place during inference. Instead of traditional AI models that rapidly generate a one-shot answer to a user prompt, models using this technique allocate extra computational effort during inference, allowing them to reason through multiple potential responses before arriving at the best answer.

On tasks like generating complex, customized code for developers, this AI reasoning process can take multiple minutes, or even hours, and can easily require over 100x compute for challenging queries compared to a single inference pass on a traditional LLM, which would be highly unlikely to produce a correct answer in response to a complex problem on the first try.

This test-time compute capability enables AI models to explore different solutions to a problem and break down complex requests into multiple steps, in many cases showing their work to the user as they reason. Studies have found that test-time scaling results in higher-quality responses when AI models are given open-ended prompts that require several reasoning and planning steps.

The test-time compute methodology has many approaches, including:

- Chain-of-thought prompting: Breaking down complex problems into a series of simpler steps.

- Sampling with majority voting: Generating multiple responses to the same prompt, then selecting the most frequently recurring answer as the final output.

- Search: Exploring and evaluating multiple paths present in a tree-like structure of responses.
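Sampling with majority voting, from the list above, can be sketched in a few lines. The example assumes the responses have already been sampled from a model; the `sampled_answers` list is a hypothetical stand-in for those responses, and the normalization step (trimming whitespace, lowercasing) is an illustrative choice rather than a prescribed one.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer and the share of samples that agree."""
    normalized = [a.strip().lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    return winner, count / len(normalized)


# Hypothetical final answers extracted from five sampled responses
# to the same prompt.
sampled_answers = ["42", "42", "41", " 42", "40"]

answer, agreement = majority_vote(sampled_answers)
print(answer, agreement)
```

The agreement share can serve as a rough confidence signal: when few samples agree, a system might spend more compute on additional samples before answering.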

Post-training methods like best-of-n sampling can also be used for long thinking during inference to optimize responses in alignment with human preferences or other objectives.
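A minimal sketch of best-of-n sampling follows. It assumes two callables that the article does not define: `generate`, which samples one response from a language model, and `reward`, which scores a response with a reward model. The toy versions here (`toy_generate`, `toy_reward`) are hypothetical stand-ins so the example runs without any model.

```python
import itertools
from typing import Callable


def best_of_n(
    prompt: str,
    generate: Callable[[str], str],
    reward: Callable[[str, str], float],
    n: int = 4,
) -> str:
    """Sample n candidate responses and return the one with the highest reward."""
    candidates = [generate(prompt) for _ in range(n)]
    return max(candidates, key=lambda response: reward(prompt, response))


# Hypothetical stand-in for a language model: cycles through canned responses.
_canned = itertools.cycle([
    "Paris",
    "The capital of France is Paris.",
    "I think it might be Lyon.",
    "France",
])


def toy_generate(prompt: str) -> str:
    return next(_canned)


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical reward: favors the right city and complete sentences."""
    score = 0.0
    if "Paris" in response:
        score += 1.0
    if response.endswith("."):
        score += 0.5
    return score


best = best_of_n("What is the capital of France?", toy_generate, toy_reward, n=4)
print(best)
```

Note that the model's parameters are never updated: all of the extra effort is spent at inference time, and compute grows roughly linearly with `n`.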

How Test-Time Scaling Enables AI Reasoning

The rise of test-time compute unlocks the ability for AI to offer well-reasoned, helpful and more accurate responses to complex, open-ended user queries. These capabilities will be critical for the detailed, multistep reasoning tasks expected of autonomous agentic AI and physical AI applications. Across industries, they could boost efficiency and productivity by providing users with highly capable assistants to accelerate their work.

In healthcare, models could use test-time scaling to analyze vast amounts of data and infer how a disease will progress, as well as predict potential complications that could stem from new treatments based on the chemical structure of a drug molecule. Or, they could comb through a database of clinical trials to suggest options that match an individual’s disease profile, sharing their reasoning about the pros and cons of different studies.

In retail and supply chain logistics, long thinking can help with the complex decision-making required to address near-term operational challenges and long-term strategic goals. Reasoning techniques can help businesses reduce risk and address scalability challenges by predicting and evaluating multiple scenarios simultaneously, which could enable more accurate demand forecasting, streamlined supply chain travel routes, and sourcing decisions that align with an organization’s sustainability initiatives.

And for global enterprises, this technique could be applied to draft detailed business plans, generate complex code to debug software, or optimize travel routes for delivery trucks, warehouse robots and robotaxis.

AI reasoning models are rapidly evolving. OpenAI o1-mini and o3-mini, DeepSeek R1, and Google DeepMind’s Gemini 2.0 Flash Thinking were all introduced in the last few weeks, and additional new models are expected to follow soon.

Models like these require considerably more compute to reason during inference and generate correct answers to complex questions, which means that enterprises need to scale their accelerated computing resources to deliver the next generation of AI reasoning tools that can support complex problem-solving, coding and multistep planning.

Learn about the benefits of NVIDIA AI for accelerated inference.